Today’s AI investment craze suffers from the same flawed assumptions that sparked past bubbles.

Without a course correction, the U.S. economy faces a self-inflicted bust.

In the mid-2000s, Wall Street’s best and brightest were packaging subprime loans into complex securities and assuring the world they were safe. Regulators nodded. Rating agencies blessed them. Investors snapped them up.

Few asked the obvious question: What if the assumptions behind these instruments are wrong?

Today, a similar blind spot is spreading through the economy – not through mortgages, but through artificial intelligence (AI).

Everyone from Big Tech giants to startup founders, institutional investors, and Washington policymakers is convinced that AI is a revolutionary technology, and that today’s big capital expenditures (capex) will lead to enormous industrywide profits in the future.

But there’s a problem: Demand for value-added, real-world applications is unlikely to be large enough to justify all this investment.

The demand that AI promoters constantly highlight comes from large language model (LLM) training runs and queries, neither of which generates much sustainable, real-world revenue.

Much of the revenue being booked in this ecosystem ultimately comes from a limited pool of venture capital (VC) funds.

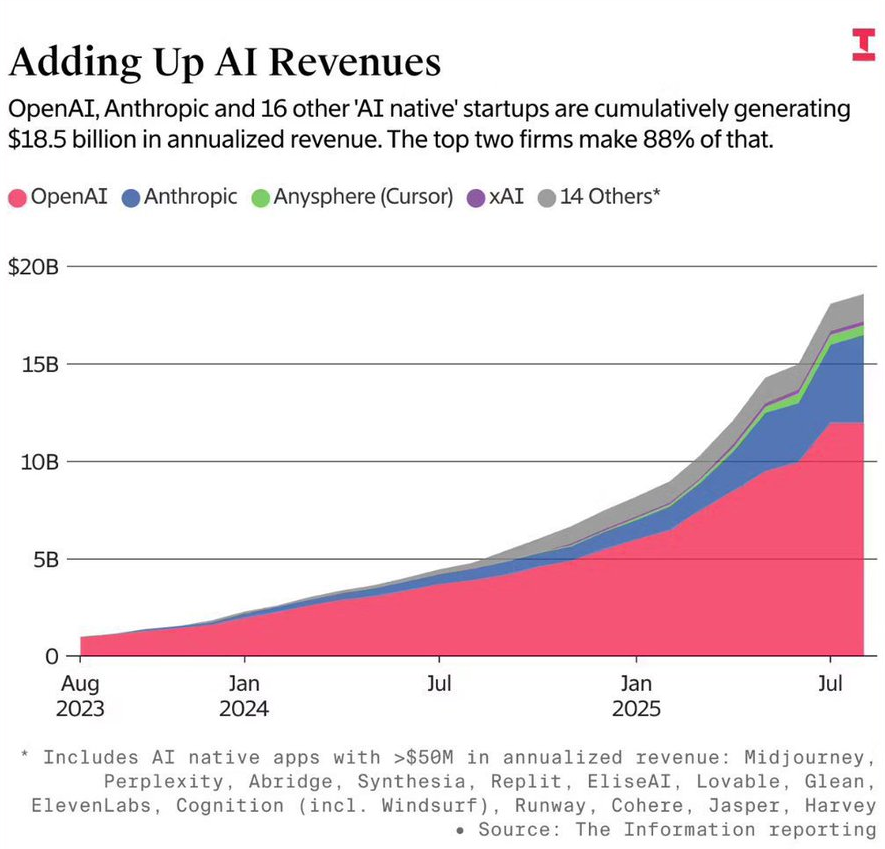

A recent story from The Information estimated that these LLM companies generate roughly $18.5 billion in annualized revenue.

Ironically, The Information was trying to rebut the claims from an MIT Media Lab report that concluded 95% of businesses are seeing no return on AI pilot projects.

It’s ironic because $18.5 billion in annualized revenue is peanuts relative to the astonishingly large amount of capital poured into these companies.

The revenue streams in the chart above are also not very valuable because LLM companies are not showing positive operating leverage, the pattern in which profits grow faster than revenue because costs rise more slowly than sales.

Plenty of evidence indicates that costs scale alongside revenue at LLM companies. Heavy users consume large amounts of electricity and shorten the useful lives of Nvidia chips. And free users will simply switch to a competing LLM if they are ever required to pay a subscription fee.
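To see why cost structure matters, here is a toy Python sketch of operating leverage. It assumes a simple, hypothetical model in which costs split into a fixed part and a variable part proportional to revenue; the function name and every number are illustrative assumptions, not figures from any LLM company’s financials.

```python
# Toy model of operating leverage. All inputs are hypothetical illustrations,
# not data on any real company.

def operating_margin(revenue: float, fixed_costs: float, variable_cost_ratio: float) -> float:
    """Operating margin = (revenue - fixed costs - variable costs) / revenue."""
    variable_costs = variable_cost_ratio * revenue
    return (revenue - fixed_costs - variable_costs) / revenue

# Hypothetical "software-like" business: each extra sale costs little to serve.
# Hypothetical "LLM-like" business: compute costs rise almost in step with usage.
for revenue in (10.0, 20.0, 40.0):
    software = operating_margin(revenue, fixed_costs=5.0, variable_cost_ratio=0.2)
    llm = operating_margin(revenue, fixed_costs=5.0, variable_cost_ratio=0.9)
    print(f"revenue={revenue:5.1f}  software margin={software:7.1%}  LLM-like margin={llm:7.1%}")
```

In this sketch, the software-like business widens its margin quickly as it grows, while the LLM-like business can never exceed a 10% margin (one minus its 90% variable-cost ratio), no matter how much revenue it books.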

From Groupthink to Boom-Bust

Today’s AI investment mania has two key blind spots: the fallacy of composition and adverse selection.

Let’s break those down.

The fallacy of composition is the mistaken belief that what’s true for one player is true for all. If one company, such as Microsoft, makes money selling AI products, investors assume the entire AI sector will do the same.

But that’s not how competitive markets work. In fact, success in a competitive market often comes at the expense of rivals. There can be winners, but not everyone can win.

This fallacy infected the telecom boom of the late 1990s. Just because one broadband provider had profitable demand didn’t mean every fiber-optic network was worth building. Billions were spent laying cables that no one would use for another decade.

Then there’s adverse selection. In insurance, this happens when sick people are more likely to buy health insurance than healthy people, leading to losses for the insurer that doesn’t attract enough healthy customers.

In AI, something similar is unfolding: the heaviest users of AI chatbots and tools – those demanding the most computing resources – are often not paying enough in subscription fees to cover their costs to the LLM provider.

The casual users, who make up the vast majority, may never convert to paying customers. This undermines the very business model these companies are betting on.

What happens when the market discovers this? You get a doom loop: companies overspend on infrastructure, hoping for future demand that never materializes, while investors keep piling in – until someone finally notices the emperor is minimally clothed.

Why Small Businesses, Not Big Tech, May Be the Real Winners

There’s a popular narrative that the so-called “Magnificent 7” (Microsoft, Google, Apple, Amazon, Meta, Nvidia, and Tesla) will capture most of the economic value created by AI.

But history suggests otherwise.

In past tech revolutions, the biggest winners weren’t always the biggest companies. The advent of the internet helped millions of small and mid-sized businesses gain customers and compete globally. Low-cost e-commerce tools like Shopify and advertising platforms like Google and Facebook gave them a reach they never had before.

Similarly, it’s possible that AI’s biggest beneficiaries won’t be the firms building the models, but the dentists, restaurant owners, and local manufacturers who use those tools to accelerate mundane processes and serve customers better.

The irony? While these SMBs (small- and medium-sized businesses) benefit, the AI platforms enabling them may struggle to get paid. If Google’s new Veo video model helps a dentist craft a great local ad, will that dentist pay Google more?

The Myth of Lock-In and the Illusion of Pricing Power

One of the most dangerous assumptions in AI capex is that these platforms will enjoy pricing power – meaning they can charge enough to cover their costs and then some.

But look closely, and this assumption crumbles.

The AI chatbot market looks a lot like perfect competition, a textbook term for markets where many providers offer nearly identical products, and prices fall toward the cost of production.

Unlike the broadband boom of the 2000s, where companies had to rip up streets to lay wires and customers had few broadband alternatives, switching from one AI model to another takes a few clicks.

If you’re a free user of ChatGPT, and it suddenly costs $20 a month to use it at all, but Claude or Gemini is free and just as good, nothing is stopping you from switching.

That’s the nightmare scenario for the AI providers: they’ve built massive infrastructure assuming recurring subscription revenue, but the customer base may have no loyalty and little willingness to pay.

It’s like investing billions into oil wells only to find out your oil sells for $10 a barrel instead of $70.

Where’s the Feedback?

In a healthy economy, production and consumption communicate constantly. If a company builds something useful, customers respond by buying it. If they overbuild, inventories pile up and prices fall, sending a signal to slow down.

AI infrastructure, by contrast, is being built largely on faith. Companies are scaling up compute power without clear signs of sustainable demand. Unlike oil and gas, where prices adjust second by second, AI companies operate in a fog. They release tools, collect usage stats, and hope that paid conversions will follow.

But hope is not a business model.

In fact, today’s AI leaders resemble 2006-era banks more than healthy, feedback-driven enterprises. They’re in an unhealthy relationship with VC firms that need ever-higher valuation “marks” to justify their fees.

VC firms don’t want transparency about customer conversion rates, churn, or operating losses that scale with revenue. That would kill the narrative before the VCs can cash out through IPOs on the public market.

China’s Playbook: Focus, Not Fantasy

Meanwhile, China is asking a much more grounded question: How can we use AI to make real-world industries more efficient?

Companies like DeepSeek are building AI tools designed to solve narrow problems in energy, logistics, and manufacturing. That’s closer to how the human brain works – selectively activating regions based on the task at hand. And it’s proving to be more effective than trying to create superintelligence in a box.

While U.S. Big Tech chases lofty visions of artificial general intelligence (AGI) – often undefined and possibly unachievable – China is deploying AI in practical ways that produce results.

To compete, the U.S. must start asking more rigorous questions: What is AI actually for? Who pays for it? And how does it improve the lives of average Americans?

Carl Brown’s Warning: AI Code Is a Maintenance Nightmare

If there’s one place where AI hype is meeting hard reality, it’s software engineering.

Veteran developer Carl Brown, in an interview with Ed Zitron, shredded the idea that LLMs will replace human coders anytime soon. He explained that writing code is only a small part of the job. The real work is long-term thinking, fixing bugs, and figuring out what the software should do.

AI tools can crank out lines of code fast. But they often do it sloppily, duplicating functions and ignoring best practices. The result is software that’s harder to maintain, easier to hack, and more prone to crashing.

Brown likened AI-generated code to “vibe coding”: typing a request into a chatbot and hoping the result works. That might be fine for a one-time prototype, but it’s a recipe for disaster in software that’s meant to last.

Worse still, managers – who assume AI is miraculous because they hear about it so much on CNBC – don’t understand programming. These managers are using AI to justify layoffs or to demand unrealistic productivity increases from their teams.

This may create a generation of junior engineers who never learn to code properly because they’re relying on tools that don’t understand the long game of software development.

It’s often claimed that software is the most natural market for AI to disrupt. However, Carl Brown’s view, along with dozens of other examples, shows that in real-world stress tests, AI misses too many things, or hallucinates too often, to be considered a revolutionary technology trend.

So, in the months ahead, we’re likely to see more hints that Big Tech companies are tapping the brakes on Nvidia chip orders. They’ll do this to avoid an accelerating decline in their free cash flows – a trend that’s already looking scary.

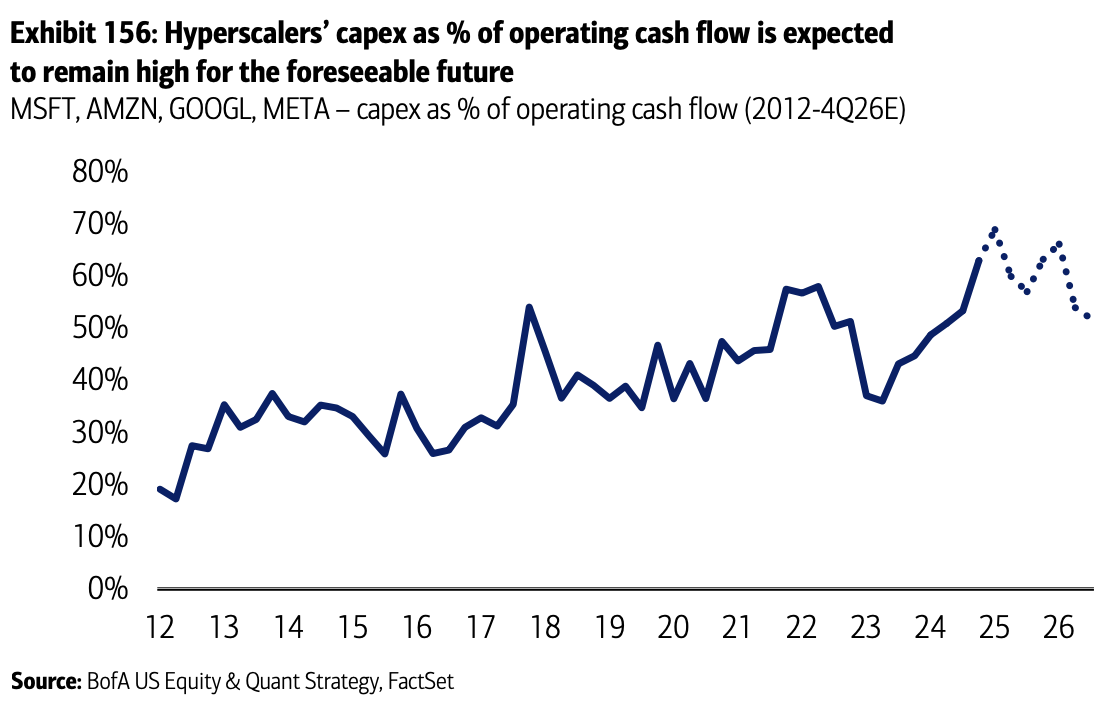

For example, the chart above is a projection from BofA Global Research. It shows capital spending as a percentage of operating cash flow at the companies building hyperscale data centers.

A recession or a bust in venture capital funding for AI LLM companies (like OpenAI) could drive operating cash flow lower.

That would push the ratio in the BofA chart even higher than the dotted-line projections. And because free cash flow is operating cash flow minus capex, higher capex relative to cash flow means free cash flow would dive toward zero. To avoid that scenario, hyperscale data center operators would cut AI chip orders.
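The arithmetic behind that squeeze can be sketched in a few lines of Python. The inputs below are hypothetical round numbers chosen for illustration, not BofA’s projections or any company’s reported figures; the only fixed relationship is that free cash flow equals operating cash flow minus capex.

```python
# Free cash flow (FCF) = operating cash flow (OCF) - capital spending (capex).
# All inputs are hypothetical illustrations, not BofA's projections.

def free_cash_flow(operating_cash_flow: float, capex: float) -> float:
    return operating_cash_flow - capex

def capex_ratio(operating_cash_flow: float, capex: float) -> float:
    """Capex as a share of operating cash flow, the metric plotted in the chart."""
    return capex / operating_cash_flow

capex = 90.0  # hypothetical: spending locked in by existing build-out plans
for ocf in (150.0, 120.0, 100.0):  # hypothetical: OCF falling in a downturn
    ratio = capex_ratio(ocf, capex)
    fcf = free_cash_flow(ocf, capex)
    print(f"OCF={ocf:.0f}  capex/OCF={ratio:.0%}  FCF={fcf:.0f}")
```

With capex held fixed, each drop in operating cash flow raises the capex ratio (60%, then 75%, then 90%) and shrinks free cash flow (60, then 30, then 10). Cutting chip orders, which lowers capex, is the lever operators directly control.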

Editor’s note: Members of Strategic Intelligence Pro can access Dan’s full report, including the stock pick, here.